Professional Graphics Cards versus Consumer Cards:

As a Working Professional, Which Cards Give You the Performance You Need?

One thing that has always bothered me is that no one seriously compares workstation video cards to desktop video cards. As a technology consultant for a small company, I find it very frustrating not to have all the data required to make an informed decision. Many people attribute the lack of research in this area to the idea that the two types of cards are built for different tasks, so comparing them would be like comparing two dissimilar objects. Others argue that the target markets for the cards are so different that there is little crossover. Anecdotally, this author’s experience differs from those ideas, and that is why this article was written.

This really becomes a story about price discrimination, a fairly simple economic phenomenon to explain. For example, Adobe makes a very nice photo editing program called Photoshop. Adobe prices this product based on the cost of producing it and the price it expects to be able to charge given the demand schedule for Photoshop. This puts the price of Photoshop around $700. The main rationale for this pricing is that most users of Photoshop need it; it can actually be tied to their livelihood. This means that these purchasers, whether companies or individuals, have what is called a low elasticity of demand, meaning that they will most likely pay whatever is necessary to procure the product. Others, however, view Photoshop as a luxury or novelty and would like it but don’t want to pay $700 for it. (For the purposes of this article, piracy is ignored.) Adobe realizes there is demand for its product at these lower prices and profit to be had there. However, if Adobe lowered the price of Photoshop for everyone, the revenue lost on customers who would have paid full price could outweigh the revenue gained from new buyers. Enter a price discrimination scheme. Adobe has to find a way to sell a cheaper Photoshop only to the people who value it less (people with a higher elasticity of demand). The trouble is that Adobe has no way of knowing whom to offer a discount and whom to charge full price. Adobe cannot simply ask customers how much they value the product and hope that they answer honestly. Instead, it releases a crippled version of Photoshop called Photoshop Elements and lets consumers sort themselves. The consumers with the lowest elasticities of demand will be the ones who need the features cut from Photoshop Elements, and they will still buy the fully featured, full-priced product. Those who just want to do some casual photo editing will be more likely to purchase the “dumbed down” Photoshop Elements.
There is some room for arbitrage here, as some of the people who were buying the full version may realize that the $100 version is all they need. Adobe will not care as long as the extra profit from new Photoshop Elements sales outweighs any profit lost to this arbitrage. Price discrimination of this sort is quite common among software manufacturers. However, price discrimination is not confined to the software industry or even the computer industry. Airlines offer business class tickets that are fully refundable, as opposed to regular tickets, which in many cases are not. Intel and AMD force you to buy a more expensive processor if you want more than one processor on a motherboard. And, of course, the subject of this article, video card manufacturers, also price discriminate.
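A toy model can make the self-sorting and arbitrage argument concrete. The sketch below assumes that each buyer simply picks whichever option leaves the most value after paying (or buys nothing). It uses the $700 and $100 prices from the text, but the segment valuations and buyer counts are invented purely for illustration and are not Adobe’s data. Revenue stands in for profit, on the assumption that an extra software copy costs next to nothing to produce.

```python
# Illustrative self-selection model of price discrimination.
# All valuations and buyer counts are hypothetical; only the two prices come from the text.
FULL_PRICE = 700      # full Photoshop
ELEMENTS_PRICE = 100  # Photoshop Elements

# (segment, value of full version, value of Elements, number of buyers)
SEGMENTS = [
    ("studio", 1500, 200, 10),    # needs the full feature set
    ("light_pro", 750, 300, 5),   # could live with Elements
    ("hobbyist", 300, 180, 40),   # casual photo editing
]


def price_paid(value_full, value_lite, offer_lite):
    """Price a buyer pays when choosing the option with the largest surplus (value - price)."""
    options = [(value_full - FULL_PRICE, FULL_PRICE)]
    if offer_lite:
        options.append((value_lite - ELEMENTS_PRICE, ELEMENTS_PRICE))
    surplus, price = max(options)
    return price if surplus >= 0 else 0  # walk away if every option costs more than it is worth


def revenue(offer_lite):
    """Total revenue across all segments with or without the cut-down product on offer."""
    return sum(count * price_paid(vf, vl, offer_lite) for _, vf, vl, count in SEGMENTS)


if __name__ == "__main__":
    print("Full version only:", revenue(offer_lite=False))  # 10 * 700 + 5 * 700 = 10500
    print("Full + Elements:  ", revenue(offer_lite=True))   # 10 * 700 + 5 * 100 + 40 * 100 = 11500
```

In this made-up example the “light_pro” segment is the arbitrage case. It switches from the $700 product to the $100 one and costs $3,000 in revenue, but the 40 new hobbyist sales bring in $4,000, so the two-tier scheme still comes out ahead.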

The question is, are the professional video cards different enough to warrant their price premium? Seven graphics cards were put to the test to answer that question.

The Candidates

First, let it be known that all the cards in this article are NVIDIA based. This is not a sign of preference for NVIDIA or prejudice against AMD; it is simply a logistical circumstance, as all the cards available for testing were NVIDIA cards. A little background is in order. The office that provided most of the cards tested does visual effects and animation for television shows and corporate videos. Most of the work takes place in Maya, 3ds Max, and Fusion. The office has a bias toward NVIDIA, so NVIDIA cards were the only ones available to test with. With that said, let’s examine the video cards in the test.

| GPU | Quadro FX 560 | Quadro FX 1700 | Quadro FX 3400 | GeForce 7900 | GeForce 7950 | GeForce 8800 GT | GeForce GTX 280 |
|---|---|---|---|---|---|---|---|
| Core | G73 | G84 | G70 | G71 | G71 | G92 | GT200 |
| Raster Operations Pipelines (ROPs) | 4 | 8 | 8 | 16 | 16 | 16 | 32 |
| Core Clock | 350MHz | 460MHz | 350MHz | 500MHz | 600MHz | 600MHz | 602MHz |
| Shader Clock | N/A | 920MHz | N/A | N/A | N/A | 1500MHz | 1296MHz |
| Memory Clock | 600MHz | 400MHz | 900MHz | 750MHz | 725MHz | 900MHz | 1107MHz |
| Memory Bus Width | 128-bit | 128-bit | 256-bit | 256-bit | 256-bit | 256-bit | 512-bit |
| Frame Buffer | 128 MB | 512 MB | 256 MB | 256 MB | 512 MB | 1024 MB | 1024 MB |
| Memory Type | GDDR | GDDR2 | GDDR3 | GDDR3 | GDDR3 | GDDR3 | GDDR3 |
| Transistor Count | 177 million | 289 million | 222 million | 278 million | 278 million | 754 million | 1400 million |
| Manufacturing Process | 90nm | 80nm | 130nm | 90nm | 90nm | 65nm | 65nm |
| Price Point Jan. 2010** | $85 | $450 | $140 | $50 | $100 | $212 | $350 |
| Price Point at Time of Purchase | $270 | $440 | ~$3000 | $160 | $265 | $240 | $375 |
| Time of Purchase | Dec. 2006 | Dec. 2008 | Jun. 2005 | Dec. 2006 | Dec. 2006 | Jun. 2008 | Dec. 2008 |

*All values are as reported by CPU-Z at the time of testing or were gathered from techarp.com.

**Prices listed from Jan. 2010 are from Newegg.com unless the item is no longer stocked, in which case the same model card was found on Google Shopping and the most appropriate price is listed.
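The bus width and memory clock rows are easier to compare once they are combined into a single figure. Here is a rough sketch, assuming that all three memory types transfer data twice per clock and that the table’s memory clocks are real clocks rather than already-doubled “effective” clocks. Under those assumptions, peak bandwidth = clock × 2 × bus width / 8. This is a derived estimate, not something CPU-Z or the tests measured, and the FX 3400 figure inherits the 900 MHz versus 450 MHz uncertainty noted in that card’s section.

```python
# Peak memory bandwidth estimated from the spec table.
# Assumes double-data-rate memory (two transfers per clock) and that the
# listed memory clock is the real clock, not an "effective" figure.
CARDS = {
    # name: (memory clock in MHz, bus width in bits)
    "Quadro FX 560": (600, 128),
    "Quadro FX 1700": (400, 128),
    "Quadro FX 3400": (900, 256),  # other sources list 450 MHz for this card
    "GeForce 7900": (750, 256),
    "GeForce 7950": (725, 256),
    "GeForce 8800 GT": (900, 256),
    "GeForce GTX 280": (1107, 512),
}


def bandwidth_gb_s(clock_mhz, bus_bits, transfers_per_clock=2):
    """Clock x transfers per clock x bus width in bytes, expressed in GB/s (10^9 bytes)."""
    return clock_mhz * 1e6 * transfers_per_clock * (bus_bits / 8) / 1e9


if __name__ == "__main__":
    for name, (clock, bus) in CARDS.items():
        print(f"{name:16s} {bandwidth_gb_s(clock, bus):6.1f} GB/s")
```

Under these assumptions the 8800 GT comes out at about 57.6 GB/s against roughly 12.8 GB/s for the FX 1700, and the GTX 280 at about 141.7 GB/s.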

Quadro FX 560

The FX 560 is based on the G73 graphics processing unit (GPU). The core clock is 350 MHz (megahertz), and CPU-Z reports the memory clock as 600 MHz. The FX 560 has a 128-bit memory bus but only 128 MB (megabytes) of video RAM (random access memory), and that VRAM is only GDDR. These specifications make it decidedly the weakest card in the group. The company bought the card in December 2006 for $270, on the assumption that it would perform better in 3ds Max and Combustion than the GeForce 7900. (As a side note, it performed on par with a GeForce 6600 in games at the time of purchase.)

Quadro FX 1700

This is the newest Quadro card in the office. There are actually quite a few of them, as we were operating under the same assumption as everyone else in the industry: that Quadro cards would be better equipped for the work performed in the office. With no real ability to test the cards before purchasing, we decided to err on the side of caution and go with professional cards, even though they cost more than the gaming cards on the market at the time. More specifically, the FX 1700 is based on the G84 GPU with a core clock of 460 MHz, a shader clock of 920 MHz, and a memory clock of 400 MHz. Its use of GDDR2 is a bit confusing, as every other card in the test except the FX 560 uses GDDR3, even the much older FX 3400. However, the FX 1700 does have 512 MB of video RAM, so it may be that at the time of manufacture it was more economical to include a larger quantity of slower RAM. These cards were $440 at the time of purchase in December 2008. Interestingly, at the time of this writing (January 2010), they cost $450.

Quadro FX 3400

CPU-Z reports that the FX 3400 in the office is based on the G70 GPU, though Wikipedia reports that it uses an NV45GL core, which would make it a contemporary of the GeForce 6 series. The core clock is 350 MHz. CPU-Z reports the memory running at 900 MHz, but all the other documentation on the internet lists 450 MHz. This card is the only Quadro in the bunch with a 256-bit memory bus and 256 MB of GDDR3, and it is the only one to require a 6-pin power connector. It is also the oldest card in the test, as its 130 nm (nanometer) manufacturing process shows. The purchase records for this card are incomplete, but one individual involved recalls paying about $3000 for it in June 2005.

GeForce 7900GT

The 7900GT “boasts” a G71 core clocked at 500 MHz, with the memory clocked at 750 MHz. It is also the lowest-end gaming card that was available. Because it is based on the G71, it may be a good comparison card for the FX 560, or for the FX 3400 if CPU-Z is right about the latter’s origins. Its $160 purchase price gives it a clear price advantage over the FX 560, which was purchased in the same period. The GeForce 7900 also has a 256-bit memory bus, which suggests that the FX 560 may be outclassed and that the FX 3400 may be the more similar card.

GeForce 7950GT

The 7950 is essentially a 7900 with a faster core and slightly slower memory. It also has double the memory, at 512 MB instead of 256 MB. Its price at the time of purchase makes it a good foil for the FX 560, but otherwise it is just another G71 thrown into the mix. Ideally, the extra memory will let this card handle larger scene files better than the 7900.

(Yes I just reused the 7900GT photo; they look the same except the 7950 has “7950” where the “7900” was printed.)

GeForce 8800GT

As there were no other G8x GPUs available for testing, the 8800GT serves as a foil to the FX 1700. This particular 8800GT has a G92 core. The 8800GT has an advantage over the 7000-series cards, with 2.7 times the number of transistors and a higher memory clock. Compared to the FX 1700, the 8800GT has twice the memory and twice the memory bus width. The 8800GT, bought six months before the FX 1700, also cost $200 less. Since the 8800GT was built on a 65nm process and the FX 1700 on an 80nm process, the two cannot be compared directly on hardware specifications alone.

GeForce GTX 280

Finally, we have the GeForce GTX 280. At the time of purchase it was the most powerful gaming card on the market. Ironically, it was purchased at the request of an employee who did not want an FX 1700. The GTX 280 boasts an incredible 1.4 billion transistors, a 512-bit memory bus, and 1024 MB of GDDR3 RAM. Even more ironically, it was $65 cheaper than the FX 1700, although it does require both a 6-pin AND an 8-pin power connector.

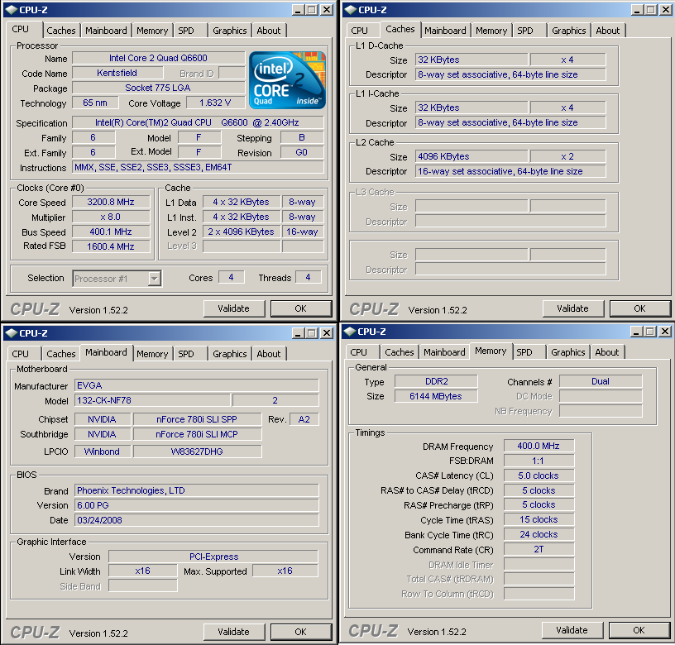

The Test Rig

The first thing to notice about the test setup is that it uses an overclocked CPU (central processing unit). The system ran Prime95 for 12 hours the day before testing began to ensure stability, though to be honest this was mostly to test the extra 2 GB (gigabytes) of RAM added for the tests. The rest of the computer had been operating at this frequency for over a year.

Since there were no funds for a solid state disk (SSD), a 10,000 RPM drive was used instead. Windows XP Pro x64 was used as the operating system because it is the one most likely to be found in an office of this kind, since many program plugins will not function outside of Windows XP. Because Windows XP is notorious for mishandling its page file, most people suggest turning the page file off if you have enough RAM. This technique generally works, but several programs crash without a Windows page file. That leaves two options:

Option A: Create a small page file on a hard drive to force Windows to use more physical RAM. The drawback is that anything that does reach the page file can be accessed only as fast as the hard drive allows, and Windows may constantly complain about not having enough virtual memory.

Option B: Create a drive out of RAM and put the page file on it. This option was chosen. (It actually works rather well; I set it up so that 1 GB of the 6 GB became drive E and then set the page file to a fixed 1 GB on drive E. I also experimented with installing PCMark05 to the RAM drive, and the score went from 10000 to 20000.)

One person suggested that I also conduct the tests under a 32-bit version, since many CAD users were still running one. Unfortunately, the time allotted for testing did not allow all of the tests to be repeated in a 32-bit environment. Also, the purpose of the article is to test the differences between the cards, and that is best done when the programs are not hampered by anything other than the video card.

The newest drivers were not used for testing the GeForce cards. This was partly due to oversight and partly due to a lack of need: once the first series of tests was complete, it was clear that the 175.16 drivers presented no problems in any of the four programs used. There was an ironic driver issue with the Quadro cards, but that will be covered later.

Finally, the setup always uses two monitors. One is a CRT running at a nice, high 1600x1200. The other is a standard 19-inch LCD running at 1280x1024. This reflects how most professionals work, with the viewport on one screen and all the tools and other gadgets on the other. Also, there is a lot of conjecture out there that “if you are going to run two monitors hard, you need a Quadro.”

| Component | Specification |
|---|---|
| CPU | Intel Core 2 Quad Q6600 @ 3.2 GHz (8x400) |
| Motherboard | EVGA 132-CK-NF78 |
| Power Supply | Rosewill RX-850-D-B (850 Watts) |
| Hard Disk | Western Digital VelociRaptor 300 GB (10,000 RPM) |
| Memory | G.Skill DDR2-800 2 x 2 GB (5-5-5-15); Patriot DDR2-800 2 x 1 GB (5-5-5-15); all @ 400 MHz |
| Video Cards | NVIDIA Quadro FX 560, NVIDIA Quadro FX 1700, NVIDIA Quadro FX 3400, NVIDIA GeForce 7900, NVIDIA GeForce 7950, NVIDIA GeForce 8800GT, NVIDIA GeForce GTX 280 |
| Video Drivers | 191.78_Quadro_winxp_64bit_english_whql.exe (Quadro cards); 175.16_desktop_winxp_64bit_english_whql.exe (GeForce cards) |
| Operating System | Windows XP Professional x64 |
| Monitor Resolution | 1600x1200 (CRT); 1280x1024 (LCD) |

The Method

One thing many articles lack is clear documentation of the methods used for testing, which leads many readers to think that the findings are suspect and not to be trusted. A thorough explanation of the methods used to test the cards follows.

Maya

Many people operate under the idea that video cards improve rendering performance, so some of the tests were designed to check this theory as well. The first test in Maya is one of them.

Maya is now the primary modeling and animation software used at the office. It is used quite frequently for visual effects and animation in the entertainment industry, so it was included in the tests.

First, the computer is rebooted. Then Maya 2009 x64 is launched, and a scene that uses nParticles and fluids to approximate a meteor entering an atmosphere is opened. The Maya scene file can be found here. The fluid does not have a cache file, so the scene is first simulated from frame 1 to frame 24. Frame 24 was chosen only because of its long render time. Once on frame 24, a render window is opened, the renderer is set to mental ray, and the quality preset “Production” is selected. The frame is then rendered at 960x540. The render time is recorded and the render is started again. This process is repeated until five renders are complete. A sample frame follows.

After the mental ray renders are complete, Maya Software is chosen as the renderer, because fluids are meant to be rendered in Maya Software. The quality setting is set to the “Production quality” preset and the frame is rendered. The render time is recorded and the render repeated until five frames have been rendered. A sample frame follows.

The next tests were designed to test the capabilities of the graphics hardware in the viewport.

A high complexity scene is loaded. Screenshots and a copy of the file are unavailable because the company that provided the file cannot release that information yet. The scene contains two complete human models in a cauldron with some vegetables. The human models include the internal organs, skeletal system, muscular system, and nervous system. All objects are unhidden, solids are shaded without wireframe on top, and finally the High Quality viewport option is ticked. This makes Maya try to show the scene in the viewport with the scene’s lighting scheme, textures, highlights, and shadows. In the end, the scene has over 7 million triangles and just barely fits into the 5 GB of free RAM. FRAPS is then started, with its benchmark set to stop after 60 seconds. Once the benchmark is started, the camera is rotated randomly around the scene for the next 60 seconds while the benchmark runs. This test is repeated five times to reduce the influence of outliers. The series of five tests is then repeated with the High Quality viewport option off.

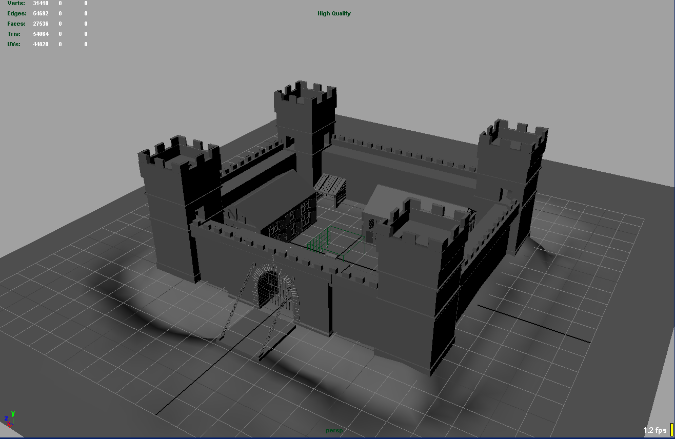

Once that series of tests is complete, a less complex scene is loaded. This is a free scene found on the internet; you can find it here. It involves a castle, a drawbridge, and a small moat. The scene is completely untextured and contains a mere 54 thousand triangles.

The same procedure is performed as with the first scene. High Quality is ticked and the camera is rotated randomly for five 60-second intervals while frame rates are recorded. Finally, High Quality is turned off and the tests are repeated once more.

3ds Max

3ds Max was the first program the company used for creating all of its visual effects and animations; however, its inability to handle fluids natively and the desire to move to a node-based workflow led to its replacement by Maya. Nonetheless, many video game production companies use 3ds Max for game design, so it needed to be included in the test suite.

First, the computer is restarted. Then 3ds Max 2009 x64 is loaded, followed by the high complexity scene. Screenshots of the final render and a copy of the scene are unavailable at the company’s request. The scene shows the interior of a museum-like building, with two pools of water on opposite sides of the camera, lit from below. The ceiling is lined with half-cylinder lights. 3ds Max reports 453 thousand polygons in the scene. The render options are opened and global illumination is turned on. A render of frame 1 is started; the time is recorded and the render is restarted until five frames have rendered and their times have been recorded. FRAPS is then started. As with Maya, the camera is rotated randomly around the scene for five 60-second intervals and the frame rates are recorded.

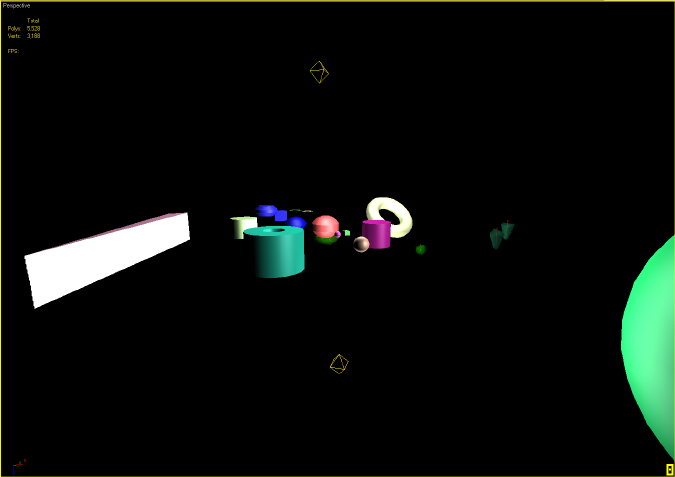

Next, a low complexity scene is loaded. It consists of three omni lights placed around an amalgam of 3D primitives and was made solely for the purposes of this article. It may be found here.

This scene is far less complex than the first: it has very few lights and only 5,500 polygons. As with the other tests, FRAPS is started and the camera is rotated randomly around the scene for five 60-second sessions.

Revit Structure

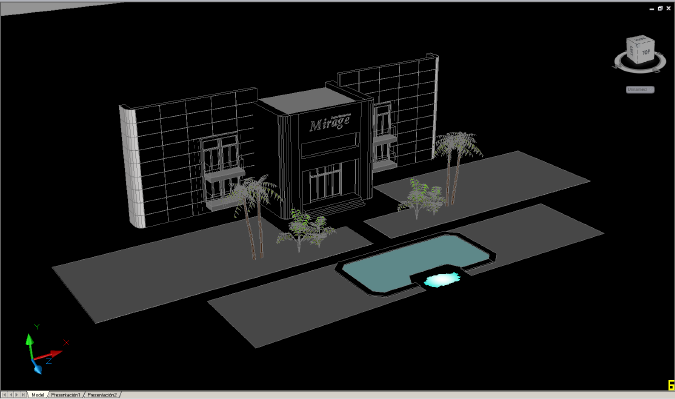

Revit is an architecture/civil engineering program (Structure is the civil engineering version) used to design buildings. It was chosen to test real-world, CAD-like situations with highly complex models and lots of right angles.

First, the computer is restarted. Revit Structure 2010 x64 is then launched. Only one building file is loaded, as it contains both the complex test and the simple test. Screenshots and a copy of the file are unavailable to the public. Suffice it to say that the same procedures used with 3ds Max and Maya, except for rendering, are applied to both the full version of the building and a partial version, with five one-minute tests each time.

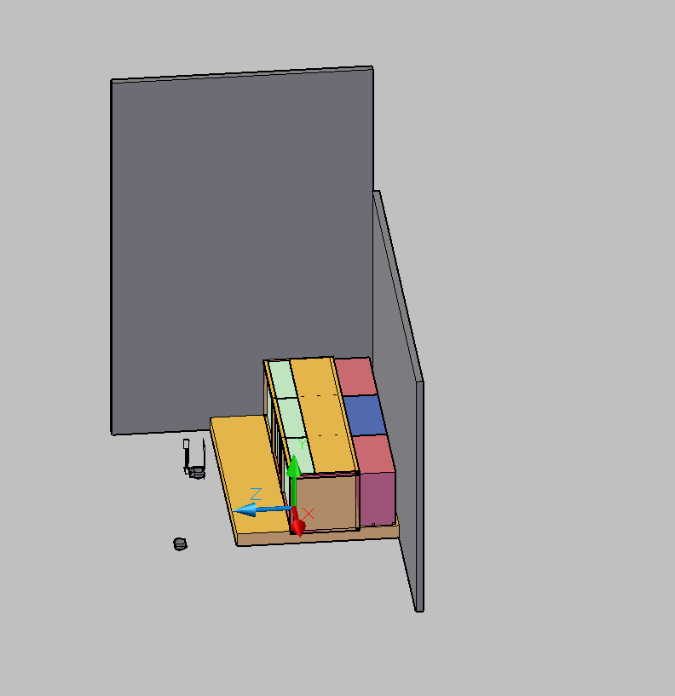

AutoCAD

AutoCAD was something of an afterthought; luckily, it was thought of before testing on the first card was complete. In researching the Quadro cards, the term “CAD card” kept coming up, so AutoCAD was included in the hope that, if nowhere else, some performance gain from the Quadro cards would certainly show up here. Two drawings were downloaded: one with some complexity and one with little complexity.

Higher Complexity

Lower Complexity

As with the other three programs, the computer is first rebooted, and then AutoCAD 2010 is loaded. The 3DCONFIG command is run to ensure all the proper options are turned on: hardware acceleration enabled, “Enhanced 3D Performance” on, the Gooch hardware shader enabled, per-pixel lighting enabled, and “Full-shadow display” on. Then, as with the other programs, each drawing is rotated randomly five times for one minute each and the frame rates are recorded. Finally, the computer is turned off for the insertion of the next card. All of the GeForce cards were tested first; then the Quadro drivers were installed and all of the Quadro cards were tested.

The Results

The results of these tests may not be what the reader expects, especially given the wide price range of the products tested. First, note that all values reported are averages; in the case of frame rates, they are averages of averages. Again, this technique is used in an attempt to eliminate potential flukes in the results. The full results files may be found here.
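The “averages of averages” approach for frame rates can be sketched as follows. The sketch assumes, as the method section describes, that each card/test pair produced five FRAPS runs of 60 seconds, each summarized by its average frame rate. The frame counts are invented for illustration. One useful property: because every run has the same length, the average of the run averages equals the overall frames per second across all five minutes, so the method does not overweight any single run.

```python
from statistics import mean

RUN_SECONDS = 60  # each FRAPS benchmark stopped after 60 seconds


def run_average_fps(frames_rendered, seconds=RUN_SECONDS):
    """Average frame rate for one FRAPS run."""
    return frames_rendered / seconds


def card_score(frame_counts):
    """Average of the per-run averages for one card in one test."""
    return mean(run_average_fps(n) for n in frame_counts)


# Hypothetical frame counts for five 60-second runs (not measured data).
runs = [1800, 1710, 1890, 1770, 1830]
score = card_score(runs)

# Because every run lasts the same 60 seconds, this equals total frames / total time.
pooled = sum(runs) / (RUN_SECONDS * len(runs))

print(score, pooled)  # 30.0 30.0
```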

First up is rendering influence. Note that in the render tests, times were measured to the nearest second; the decimal places come from averaging.

The graph makes the results look much more drastic than they actually are. The difference between the best and the worst performer is only 3%, and even then the raw data for the cards overlap almost entirely. The most likely reason the 8800GT looks like the clear loser is that extraneous programs may have been running in the background during its tests that were not running during the others, since the 8800GT was tested first.

Of the 35 times this frame was rendered, it rendered in 11 seconds in all but 3: the 8800GT once took 13 seconds, and the FX 3400 and the FX 560 each once took 12. So 32 out of 35 times, the frame rendered in 11 seconds. These results indicate that the video card does no meaningful work during rendering. What is interesting is that restarting the computer could make a huge difference. On occasions when a video card was swapped and DualView was set up again without a restart, the frame rendered in 3 minutes 30 seconds in mental ray and 19 seconds in Maya Software. So a fresh restart will do much more for render times than any video card can.
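Because the timings were taken to the nearest second, a single slow run is enough to move a card’s average. The sketch below rebuilds the per-card timings from the counts given above. Which run was the slow one is not recorded, so its position in each list is arbitrary. The sketch shows that one 13-second render among five turns an 11.0-second card into an 11.4-second card, a gap of a few percent that says more about measurement resolution than about the graphics card.

```python
from statistics import mean

# Render times in whole seconds (1-second resolution), reconstructed from the text:
# 32 of 35 renders took 11 s; the 8800GT had one 13 s render, and the FX 3400
# and FX 560 each had one 12 s render. The order of runs within a card is unknown.
render_times = {
    "GeForce 8800GT": [11, 11, 11, 11, 13],
    "Quadro FX 3400": [11, 11, 11, 11, 12],
    "Quadro FX 560": [11, 11, 11, 11, 12],
    "Quadro FX 1700": [11, 11, 11, 11, 11],
    "GeForce 7900": [11, 11, 11, 11, 11],
    "GeForce 7950": [11, 11, 11, 11, 11],
    "GeForce GTX 280": [11, 11, 11, 11, 11],
}

averages = {card: mean(times) for card, times in render_times.items()}
fastest, slowest = min(averages.values()), max(averages.values())
spread_percent = (slowest - fastest) / fastest * 100

for card, avg in sorted(averages.items(), key=lambda item: item[1]):
    print(f"{card:16s} {avg:.1f} s")
print(f"Spread between fastest and slowest average: {spread_percent:.1f}%")
```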

Again, the graph accentuates the differences. In actuality there is only a 1% difference between the “fastest” card and the “slowest” card. One could speculate that this almost microscopic trend comes from rendering to the screen: some information is passed to the video card, and the cards better equipped to handle it fared better. If one rendered to a file instead, one would expect the distribution of performance to be more random or even.

The results above prove categorically…

Video cards have no real impact on render times!

(So, to all those newegg.com reviewers out there who seem to think a video card change can drastically improve render times: it cannot. The only way that could be true is if you were moving from an integrated graphics solution that shares system memory to a discrete solution with its own memory. Such a move would free up system RAM, and that is the only conceivable way a graphics card could improve your render times.)

That being said, the statement above is not completely true. There was once an instance in Maya where Maya Software refused to render some objects and mental ray rendered them incorrectly, so the Maya Hardware renderer had to be used to render the sequence. The render nodes in the office, having only integrated graphics, rejected the job, but all the computers with discrete graphics cards rendered the frames perfectly.

Nor is that the only case in which a graphics card could improve render times. All G80 and later GPUs from NVIDIA are CUDA (Compute Unified Device Architecture) enabled. CUDA is NVIDIA’s parallel computing platform, which allows programs to tap the incredible parallel processing power of graphics cards.

Rendering is an extremely parallel process and could be influenced greatly by the ability to tap into the GPU.

For those unfamiliar with rendering, here is why this is important. The circled area in the picture above shows four “buckets,” one for each of the four CPU cores in the computer. Ideally, one wants as many buckets rendering simultaneously as possible. Right now the best way to do that is to distribute the frame over a network so that multiple computers can work on it at once. The problem is that getting 40 buckets requires 10 computers and 10 copies of the program. With the ability to use the GPU, one could easily get 200 buckets from just one computer. Though each of these would be slower than a CPU core, their sheer quantity can make up for the lack of individual speed. If distributed bucket rendering is enabled and the GPUs of other computers are allowed to join in, frames that took hours could take minutes or seconds to render.
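The bucket idea can be sketched in a few lines. This is a simplified model, not how mental ray or Maya Software are actually implemented. The frame (960x540, as in the Maya test) is cut into tiles, and a pool of workers takes them one at a time, so the number of workers is the number of buckets in flight at once. The 32-pixel bucket size and the `render_bucket` stand-in are assumptions for illustration. Four workers correspond to the four buckets described above; a GPU renderer would effectively push `workers` into the hundreds.

```python
from concurrent.futures import ThreadPoolExecutor


def make_buckets(width, height, size):
    """Split a frame into size x size tiles ("buckets"); edge tiles may be smaller."""
    return [
        (x, y, min(size, width - x), min(size, height - y))
        for y in range(0, height, size)
        for x in range(0, width, size)
    ]


def render_bucket(bucket):
    """Hypothetical stand-in for the renderer's per-bucket work: returns the pixel count."""
    _, _, w, h = bucket
    return w * h


def render_frame(width, height, bucket_size=32, workers=4):
    """Hand buckets to a pool of workers; each worker renders one bucket at a time."""
    buckets = make_buckets(width, height, bucket_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        pixels = sum(pool.map(render_bucket, buckets))
    return len(buckets), pixels


if __name__ == "__main__":
    count, pixels = render_frame(960, 540)
    print(count, pixels)  # 510 518400
```

Python threads are used here only to show the scheduling pattern. Because of the global interpreter lock, they would not speed up real CPU-bound shading, which production renderers perform in native code.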

Art and Animation Studio currently makes a plugin called FurryBall that allows final-render-quality images to be updated in real time in the viewport. Furthermore, the makers of mental ray recently demonstrated producing high quality frames measured in frames per second instead of seconds per frame. On January 31, 2010, mental images plans to release mental ray 3.8 with iray, which will allow GPU rendering in Maya. There will be more on the implications of that in the conclusion.

Now for some tests that actually use the power of the graphics card.

Maya

Here the G80 and later cards fare better than the G70 derivatives. There is no meaningful difference between the FX 1700 and the GTX 280, and the 8800GT performs 23% better than the best G70 card. It seems odd that the 7950GT is outperformed by the lower-clocked 7900GT. However, the gaming cards are well mixed in with the professional cards, so there is no clear winner in this test.

Here again the FX 1700 leads the pack, but only by a slim 3% margin. Again, the cards built on G80-generation or later GPUs separate themselves from the G70 derivatives by a substantial margin, an average of 38%.

This test shows the Quadro cards separating themselves from the GeForce cards. In an odd twist, it appears that the better the card, the worse it does on this test. On average, the workstation cards did 8% better than the gaming cards.

With High Quality turned off, all of the frame rates become completely workable. The GeForce cards seem to edge out the Quadro cards here, but not by much; the difference between the best and the worst card is only 9%. Considering the range of prices and manufacturing dates, that is a fairly narrow spread.

It is interesting to note that, overall, CPU usage in the viewport never went above 50% when gaming cards were used and never above 25% when a Quadro was used. On the quad-core test CPU, 25% corresponds to roughly one fully loaded core and 50% to two, which suggests that the viewport may be limited by how many threads the program can keep busy. It is even more interesting that Maya is the only program to reach 50% CPU usage in the viewport; all of the other programs limited themselves to 25%.

3ds Max

In 3ds Max the gaming cards take the crown, with an average 43% edge over the professional cards. There is an interesting note about drivers here. NVIDIA makes a “performance” driver for Quadro cards. The first Quadro tested was the FX 1700, using the performance driver. Just to be thorough, the test was run again using the regular Direct3D driver, and there was no significant difference. OpenGL was also tested, but the results were so disastrous that they were discarded. It was then decided to continue the tests using only the “performance” driver, since it would probably have the best chance of bringing out the highest performance of the cards. However, when the FX 560 and FX 3400 were tested with the “performance” driver, the frame rates were horrendous (5 and 1 frames per second, respectively). This was cause for concern, so the Direct3D driver was used instead, and the frame rates returned to normal.

The results for this test are very mixed. Instead of accentuating or confirming the results of the previous test, this test only makes the picture murkier. One possible reason is that the test was not run enough times for differences at such high frame rates to average out. Furthermore, unlike in the previous tests, where the application (Maya or 3ds Max) and FRAPS agreed, the frame rate reported by 3ds Max in this test was always much higher than what FRAPS recorded. For example, 3ds Max reported an average of 700 FPS (frames per second) for the FX 560, 350 FPS for the FX 1700, and 500 FPS for the 7950GT.

Revit

The GeForce cards put themselves ahead of their professional counterparts. Why the 8800GT and 7950 are ahead of the rest by such a large margin cannot be explained from these tests. Quoting an average difference between the two groups would be misleading here, given such an unexplained spread in performance. Note that enabling Direct3D in Revit considerably improved performance, bringing it to the levels reported in the chart.

Here again the gaming cards beat out the Quadro cards. The average performance disparity here is about 15%.

AutoCAD

The results of this test are particularly ironic, since AutoCAD was chosen to emphasize the abilities of the Quadro cards. AutoCAD 2010 actually has a feature that recognizes the graphics card, notifies the user, and automatically updates the settings accordingly. Of course, EVERY card tested was “not certified,” but all of the tests were completed without incident. All of the Quadro cards were able to use the AutoCAD performance driver, whose only real effect was to make an option for smoothing lines in the viewport appear. Since the GeForce cards had no such option, it would have been unfair to force the Quadros to take on the extra work. Unlike with 3ds Max, none of the cards had an issue with the performance driver. However, once again the GeForce cards outmaneuver the Quadros, to the tune of a 19% performance advantage.

The results are similar to those of the previous test, albeit with a less conventional ranking of which card is best. The gaming cards beat the workstation cards once again. While there seems to be no real difference among the gaming cards, the FX 1700 shows a considerable gain over the other two Quadro cards.

Conclusions

Many findings can be drawn from these tests, although probably with several caveats. Some may argue that the tests did not use the program features that make the professional cards shine (if any exist), but the tests performed for this article use real files from real companies for current projects (except where noted). These tests are not a synthetic amalgamation of every feature available in their respective programs, but they are a realistic sampling of how the programs are used.

First, the Quadro card architecture is actually quite well designed. When transistor count and clock speed are considered, the Quadro cards appear to be at a severe disadvantage. However, especially in Maya, the FX 1700 proves to be at least equal to, if not better than, the 8800GT and GTX 280. Unfortunately, the price premium for this efficiency does not justify the purchase unless the money saved on power supplies somehow offsets the extra cost of a professional graphics card.

Of course, the real finding, the one that NVIDIA and AMD dread, is that in real-world, practical applications there is no significant performance loss from using a gaming card instead of a professional card. In fact, there is sometimes a performance gain to be had by using a gaming card. The latter part of this conclusion, however, is not necessarily true in general. Because of availability, this article was unable to pit cards built on the same GPU core against each other. Ideally, the Quadro FX 1700 would have been paired with a G84 version of the GeForce 8600GT, the 8800GT would have been matched with an FX 3700, and the GTX 280 would have been compared to an FX 4800. However, even if such tests were possible, the price disparities would still have favored the GeForce series. For example, since the $790 FX 3700 costs about 3.7 times as much as the $212 8800GT, it would need to offer a performance gain of around 270% to make up for its price tag. Therefore, on price, the GeForce series more often than not offers better value to all customers, not just gamers.
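The break-even figure for the FX 3700 comes from a simple price ratio. The sketch below reproduces it, assuming that performance per dollar is the only metric and that benchmark scores scale linearly with performance; the function name `break_even_gain` is hypothetical and the only inputs are the two prices quoted in this article.

```python
def break_even_gain(pro_price: float, consumer_price: float) -> float:
    """Return the percent performance gain a pricier card needs so that its
    performance per dollar matches a cheaper card's.

    Assumption (hypothetical model): performance per dollar is the only
    metric, and performance scales linearly with benchmark score.
    """
    if pro_price <= 0 or consumer_price <= 0:
        raise ValueError("prices must be positive")
    # The pricier card must deliver (pro_price / consumer_price) times the
    # cheaper card's performance; subtracting 1 expresses that as a gain.
    return (pro_price / consumer_price - 1.0) * 100.0


# Prices quoted in the article: Quadro FX 3700 ($790) vs GeForce 8800GT ($212).
gain = break_even_gain(790.0, 212.0)
print(f"FX 3700 break-even gain: {gain:.0f}%")  # prints "FX 3700 break-even gain: 273%"
```

The result, roughly 273%, agrees with the "around 270%" estimate; equivalently, the FX 3700 would need about 3.7 times the 8800GT's performance just to break even on price.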

It should also be said that the same is not necessarily true for AMD/ATI products, since none of them were tested for this article. Still, the chance that their GPUs would not show similar characteristics seems remote.

Furthermore, this article is not meant to imply that there are no good uses for professional graphics cards; there are. It was only meant to investigate the tasks for which most of these cards are actually used.

Future work for this article would include testing AMD-based cards, comparing cards built on the same core, and testing professional video and compositing programs such as Adobe Premiere and Eyeon Fusion.

Recommendations

Software developers should look into better ways to use multi-core and multi-CPU systems. The fact that Maya was the only program ever to use more than one core when not rendering is a little disheartening. Graphics display capabilities could improve enormously and performance would still be poor because of the CPU bottleneck. Revit 2010 actually has a switch that enables the use of more cores. It is off by default because enabling it degrades performance on dual-core systems, and Autodesk estimates that most Revit users are on dual-core systems. The switch was tried for this article but only caused Revit to crash.

NVIDIA really needs to reconsider its price discrimination strategy for the Quadro line of cards. If further investigations confirm the findings of this article, the opportunity for arbitrage will be enormous. Thus, NVIDIA has two options.

One option is to lower the prices of the Quadro line to more accurately reflect their performance relative to their GeForce cousins. The probability of this happening is fairly low, though if NVIDIA takes no action at all it may happen anyway as demand drops. The best approach would be to price in power supply cost savings along with performance, though realistically that would justify a premium of only about $80 to $100 over the GeForce counterparts.

The second option is to take a page out of the Intel playbook and force professionals to buy the Quadro line at the current inflated prices (no dual- or quad-socket LGA775 boards? Really?). With the advent of GPGPU (general-purpose computing on graphics processing units), especially through CUDA, NVIDIA is in a good position to force everyone who wants to use GPGPU to do so only on Quadro cards. Unfortunately, NVIDIA has made a concerted effort to assure everyone that all G80 and later GPUs are CUDA-enabled, so a move to Quadro-only CUDA software could create a public relations fiasco. NVIDIA would be hard-pressed to buy every CUDA plugin and bundle it with an overpriced card, as it did with Adobe Premiere. Furthermore, NVIDIA has to watch out for AMD's similar solution: if AMD is willing to let GPGPU run on its gaming cards, then NVIDIA's "professional only" approach would cost it customers. Therefore, the only realistic option is to lower the price of Quadro cards enough to induce more purchases while doing everything in its power to compel CUDA application writers to develop specifically for Quadro FX cards. Within a couple of years, though, there will probably be plenty of free ways to circumvent such measures. NVIDIA will probably implement a scheme similar to this to maintain its current profit levels.

Two other, though unrealistic, options are increasing Quadro performance to match the price point, or raising the price of GeForce cards to bring them in line with the performance of the Quadro cards. The latter will never happen unless AMD illegally agrees to a price-fixing scheme and is willing to raise the prices of its own gaming cards. The former is unrealistic because, although the professional cards already perform well, the same company offers much cheaper alternatives with comparable performance.

For the time being, consumers should consider power requirements when buying a graphics card for a professional workstation. If your power supply has enough headroom, a gaming card can actually deliver a performance gain and save you money.